Google DeepMind’s new AI can follow commands in 3D games it’s never seen before

Google DeepMind has revealed new research highlighting an AI agent that can carry out a range of tasks in 3D games it has never seen before. The team has long experimented with AI models that can win at chess and even learn to play games. Now, for the first time, according to DeepMind, an AI agent has shown that it can understand a wide range of game worlds and carry out tasks in them based on natural-language instructions.

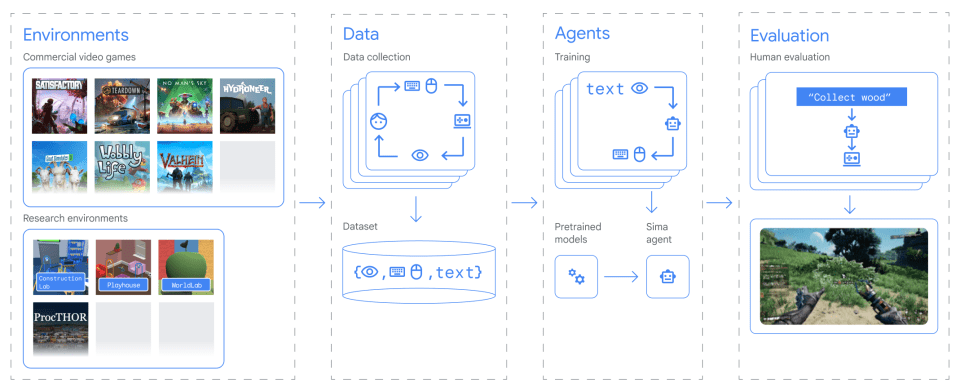

The researchers partnered with studios and publishers such as Hello Games, Tuxedo Labs and Coffee Stain to train the Scalable Instructable Multiworld Agent (SIMA) on nine games. The team also used four research environments, including one built in Unity in which agents must form sculptures using building blocks. This gave SIMA, described as “a general-purpose AI agent for 3D virtual environments,” a range of environments and settings to learn from, with a variety of graphical styles and perspectives (first- and third-person).

“Each game in SIMA’s portfolio opens up a new interactive world, including a range of skills to learn, from simple navigation and menu use to mining resources, flying a spaceship, or crafting a helmet,” the researchers wrote in a blog post. Learning to follow instructions for such tasks in video game worlds could lead to more useful AI agents in any environment, they noted.

The researchers recorded humans playing the games and logged the keyboard and mouse inputs used to carry out actions. They used this information to train SIMA, which has “precise image-language mapping and a video model that predicts what will happen next on screen.” The AI is able to understand a range of environments and perform tasks to achieve a given goal.
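As a rough illustration of what such recordings might look like as training data, the sketch below pairs each captured frame with the inputs a player made before the next frame. The record layout, the `InputEvent` and `TrainingExample` names, and the action strings are assumptions made for this example; the article does not describe DeepMind's actual data format.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class InputEvent:
    """One logged keyboard or mouse event (hypothetical format)."""
    time_s: float
    action: str  # e.g. "key:W" or "mouse:left_click"


@dataclass(frozen=True)
class TrainingExample:
    """What was asked, which frame was on screen, and what the human did."""
    frame_index: int
    instruction: str
    actions: Tuple[str, ...]


def pair_frames_with_inputs(
    frame_times: Sequence[float],
    events: Sequence[InputEvent],
    instruction: str,
) -> List[TrainingExample]:
    """Attach to each frame the inputs logged before the next frame was captured.

    frame_times must be sorted; events earlier than the first frame are dropped.
    """
    ordered = sorted(events, key=lambda e: e.time_s)
    times = [e.time_s for e in ordered]
    examples = []
    for i, start in enumerate(frame_times):
        end = frame_times[i + 1] if i + 1 < len(frame_times) else float("inf")
        lo, hi = bisect_left(times, start), bisect_left(times, end)
        actions = tuple(e.action for e in ordered[lo:hi])
        examples.append(TrainingExample(i, instruction, actions))
    return examples


# Made-up log: three frames half a second apart, four input events.
log = [
    InputEvent(0.1, "key:W"),
    InputEvent(0.6, "mouse:left_click"),
    InputEvent(0.7, "key:E"),
    InputEvent(1.2, "key:W"),
]
examples = pair_frames_with_inputs([0.0, 0.5, 1.0], log, "pick up mushrooms")
```

Each resulting example is a (frame, instruction, actions) triple, which is the general shape of data used when a model is trained to imitate recorded human behavior.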

Researchers say SIMA doesn’t need a game’s source code or API access: it works on commercial versions of a game. It also needs only two inputs: what’s shown on screen and the user’s instructions. Since it uses the same keyboard and mouse input method as a human, DeepMind says SIMA can work in almost any virtual environment.
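The interface described here, with screen pixels and a text instruction going in and keyboard and mouse actions coming out, can be sketched in a few lines. Everything below is illustrative: the `Observation`, `Action` and `Agent` names are invented for this example, the key bindings are hypothetical, and `KeywordAgent` is a toy stand-in that ignores the screen entirely. It is not SIMA's model.

```python
from dataclasses import dataclass
from typing import List, Protocol, Sequence, Tuple


@dataclass(frozen=True)
class Observation:
    """The only two inputs the agent receives."""
    pixels: Sequence[Sequence[int]]  # stand-in for one screen frame
    instruction: str                 # natural-language command from the user


@dataclass(frozen=True)
class Action:
    """Keyboard and mouse output, the same controls a human player uses."""
    keys: Tuple[str, ...] = ()
    mouse_dx: int = 0
    mouse_dy: int = 0
    click: bool = False


class Agent(Protocol):
    def act(self, obs: Observation) -> Action: ...


class KeywordAgent:
    """Toy policy: maps words in the instruction to fixed actions."""

    def act(self, obs: Observation) -> Action:
        text = obs.instruction.lower()
        if "turn right" in text:
            return Action(mouse_dx=50)   # hypothetical: move the mouse to turn
        if "open" in text and "map" in text:
            return Action(keys=("M",))   # hypothetical key binding
        return Action()                  # no-op when nothing matches


def run_episode(agent: Agent, frames, instruction: str) -> List[Action]:
    """Feed each frame plus the instruction to the agent and collect its actions."""
    return [agent.act(Observation(frame, instruction)) for frame in frames]


blank_frame = [[0]]
actions = run_episode(KeywordAgent(), [blank_frame, blank_frame], "turn right")
```

Because nothing in this interface refers to a game's internals, the same agent object could in principle be pointed at any title that renders to a screen and accepts keyboard and mouse input, which is the point DeepMind is making.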

The agent is evaluated on hundreds of basic skills that can each be carried out in about 10 seconds, across several categories, including navigation (“turn right”), object interaction (“pick up mushrooms”) and menu-based tasks, such as opening a map or crafting an item. Eventually, DeepMind hopes to be able to direct agents to perform more complex, multi-step tasks based on natural-language prompts, such as “find resources and build a camp.”

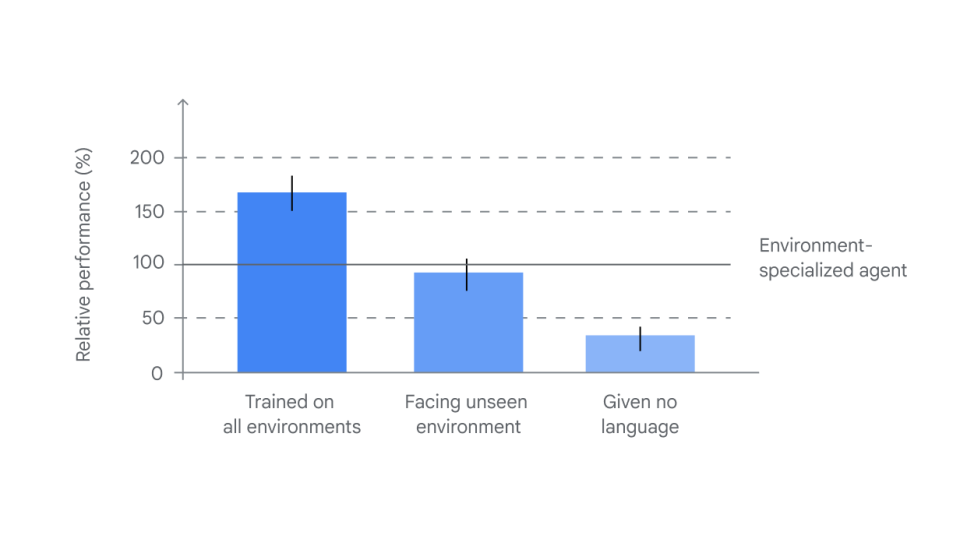

In terms of performance, SIMA fared well on a number of training criteria. The researchers trained an agent on a single game (let’s say Goat Simulator 3, for the sake of clarity) and had it play that same title, using the result as a baseline for performance. A SIMA agent trained on all nine games performed significantly better than an agent trained only on Goat Simulator 3.

What is particularly interesting is that a version of SIMA trained on eight of the games and then asked to play the ninth performed, on average, almost as well as an agent trained only on that ninth game. “This ability to operate in completely new environments highlights SIMA’s ability to generalize beyond its training,” DeepMind said. “This is a promising first result, but further research is needed to make SIMA work at the human level in both seen and unseen games.”
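One way to read these comparisons is to express every agent's score as a percentage of the single-game specialist's score on the same game, then average across games. The sketch below does that arithmetic. The function names are invented for this example, and the task counts are made-up placeholders chosen only to make the calculation easy to follow; they are not DeepMind's results.

```python
from statistics import mean
from typing import Dict


def relative_performance(score: float, specialist_score: float) -> float:
    """Express a score as a percentage of the single-game specialist's score."""
    if specialist_score <= 0:
        raise ValueError("specialist score must be positive")
    return 100.0 * score / specialist_score


def summarize(results: Dict[str, Dict[str, int]]) -> Dict[str, float]:
    """Average each agent type's relative performance across games.

    results maps a game name to the number of tasks completed by:
      "specialist": an agent trained only on that game (the baseline)
      "generalist": an agent trained on all games
      "held_out":   an agent trained on every game except that one
    """
    summary = {}
    for agent_type in ("generalist", "held_out"):
        summary[agent_type] = mean(
            relative_performance(scores[agent_type], scores["specialist"])
            for scores in results.values()
        )
    return summary


# Placeholder task counts, NOT measured results.
placeholder_results = {
    "game_a": {"specialist": 40, "generalist": 60, "held_out": 36},
    "game_b": {"specialist": 50, "generalist": 60, "held_out": 50},
}
summary = summarize(placeholder_results)
```

With these placeholder counts, the generalist averages 135% of the specialist baseline and the held-out agent averages 95%. That mirrors the qualitative pattern the article describes: better than the specialist when trained on everything, and nearly as good when the game is new.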

However, for SIMA to be truly successful, language input is necessary. In tests where the agent received no language training or instructions, it would (for example) perform the common action of gathering resources instead of walking where it was asked to go. In such cases, SIMA “behaves appropriately but aimlessly,” the researchers said. So it’s not just us mere mortals: artificial intelligence models also sometimes need a little help to do their job properly.

DeepMind notes that this is early-stage research and that the results “show the potential to develop a new wave of generalist, language-focused AI agents.” The team expects the AI to become more versatile and generalizable as it is exposed to more training environments. The researchers hope that future versions of the agent will improve on SIMA’s understanding and its ability to perform more complex tasks. “Ultimately, our research is moving toward more general AI systems and agents that can understand and safely perform a wide range of tasks in a way that is useful to people online and in the real world,” DeepMind said.