OpenAI adds watermarks to ChatGPT images created with DALL-E 3

Images created with DALL-E 3 on ChatGPT and the OpenAI API will now have watermarks.

On Tuesday, the company shared the announcement in a post on X, stating that images “now include metadata using the C2PA specifications.”

C2PA, which stands for Coalition for Content Provenance and Authenticity, is a technical standard used by Adobe, Microsoft, the BBC and other companies and publishers to combat the prevalence of deepfakes and misinformation “by certifying the source and history (or the provenance) of the multimedia content,” as explained on the C2PA website.

Images generated on ChatGPT and the OpenAI API will contain metadata identifying the AI tool that produced them and the date they were generated. The metadata can be viewed by uploading the image to sites like Content Credentials Verify. C2PA metadata slightly increases the file size, but OpenAI claims this will not affect image quality.

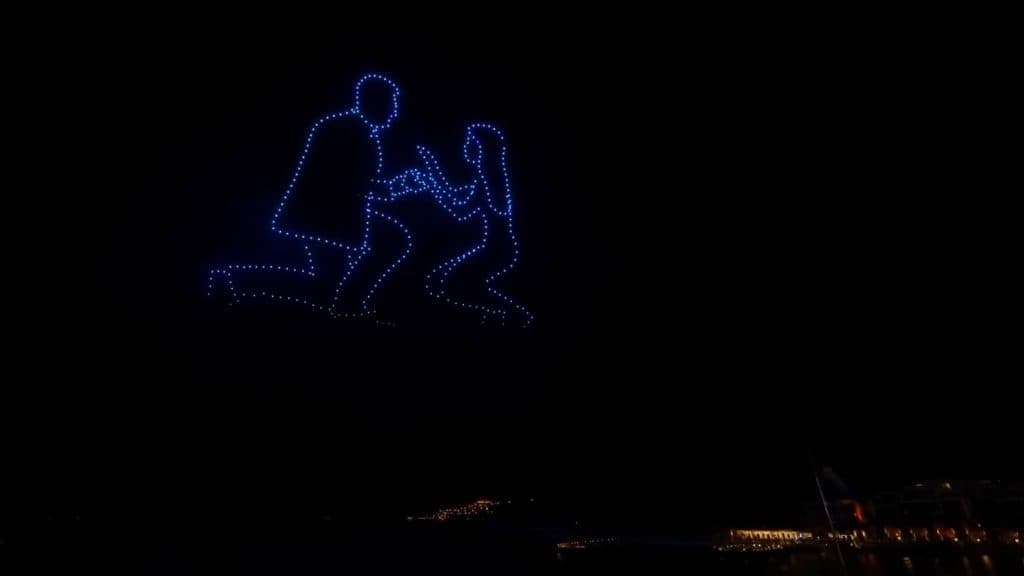

With AI image generation tools like DALL-E 3, Midjourney, Bard, and Copilot, it’s easier than ever to create deepfakes, spread misleading information, and post images that infringe on copyrighted works. Recently, pornographic deepfakes of Taylor Swift went viral, highlighting the need to fact-check images and put sufficient safeguards in place to prevent such harm, especially when it involves public figures. An executive order from President Biden announced in October 2023 specifically called for AI-generated content to be labeled as such. Guidelines for content watermarking are being developed by the Department of Commerce, which has also been tasked with requiring creators of AI foundation models to notify the government of their development.

While watermarking images is a step in the right direction toward verifying AI-generated images, it is not foolproof, since metadata can be easily removed. “For example, most social media platforms today remove metadata from uploaded images, and actions such as taking a screenshot can also remove it,” says OpenAI’s policy page detailing the C2PA standard. “Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API.”
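To make that caveat concrete, here is a minimal sketch of a presence check for PNG files. It assumes, based on the C2PA specification, that a PNG carries its manifest in a chunk of type `caBX`; the function names are hypothetical and not part of any OpenAI or C2PA tool. It only detects whether such a chunk exists. It does not validate the manifest or its signature, and other formats such as JPEG or WebP embed the data differently.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk_types(data: bytes) -> list[str]:
    """Return the chunk type names found in a PNG byte string, in order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    pos = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        types.append(chunk_type.decode("latin-1"))
        pos += 12 + length
    return types


def has_c2pa_chunk(data: bytes) -> bool:
    """True if a 'caBX' chunk is present (assumed C2PA manifest container)."""
    return "caBX" in png_chunk_types(data)
```

A `False` result says nothing about where the image came from: a screenshot or a re-encoded upload would drop the chunk while leaving the pixels unchanged, which is exactly the limitation OpenAI describes.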

The OpenAI policy page also clarifies that C2PA watermarks are applied only to generated images, not to voice or text generated via ChatGPT or the OpenAI API.